How to do robot head dance

How To Robot Dance - Easy Robot Dance Moves

You do not have to be a trained classical dancer to learn the robot dance. The robot dance has two versions - the Michael Jackson one and the “everybody” robot dance. While you may take time to perfect the legendary Michael Jackson moves, you can try your legs and arms at the “everybody” robot dance, for sure. Moreover, with dedicated practice, you can master them in no time. The robot dance, also known as mannequin dance style, demands imitating the dancing motions of robots to come up with a crooked yet cool dance form. With a little patience and constant practice, you can melt hearts on the dance floor and have a good time. So what are you waiting for? Put on some dance music, like “Mr. Roboto” by Styx or “Kyur for Itch” by Linkin Park, and set the stage on fire. Learn some easy robot dance moves by steering through the instructions stated below. Watch all the heads turn as you display some of your coolest robotic dance moves amongst the crowd.

Easy Robot Dance Moves

Set Your Back Taut

Remember, robots have no vertebrae, and the whole idea behind a robot dance is to imitate robotic movements. The accuracy of your movements therefore determines how convincing your robot dance looks, so keeping your back firm and stiff is the backbone of every robot dance move.

Bend Your Arms

Bend both arms at the elbow to form a 90-degree angle. Holding that position, raise one arm at a time until the open palm reaches eye level. This move is one of the signature steps of the robot dance and should resemble a chopping motion. Keep raising your arms alternately throughout the dance while maintaining a slow pace.

Pivot Your Head

While raising your arms alternately at a slow pace, swivel your head from right to left, repeating the motion at regular intervals. Keep your neck and head as upright and taut as you can. Combined with your arms, this completes the upper-body portion of the robot dance.


Walk Around

Though it is fine to keep your robot dance stationary, advanced robot dancers prefer to use their legs and move around to show off their skills. To match them, you can start walking around while performing the upper-body movements. Keep your walking pace as slow as the movements of your arms and head. As you raise one arm, take a step, keeping your legs bent at the knees at a 45-degree angle.

Bend At The Waist

After you have mastered the steps above, show off your skill by adding this move. Keeping your back taut and stiff, perform all the steps together and occasionally bend at the waist at a 45-degree angle. While holding the bent position, you can let one arm hang loose and swing it back and forth like a pendulum. To show your flair and style, end your robot dance with a robotic bow.


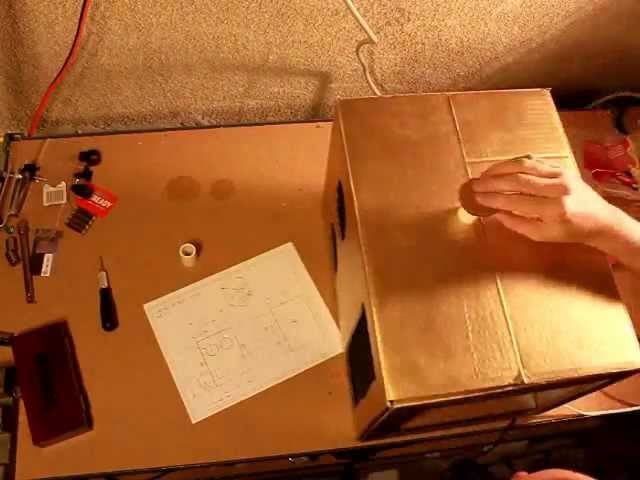

Humanlike Robots Build Trust Through Dance

Twelve white arm-like robots stand on pedestals in a dark room. A pair of human dancers approach one in the middle. The dancers and the robot stare at one another apprehensively. The dancers revolve around the robot as it rotates. Live music starts. More dancers approach, crawling, as the other robots come alive. People and machines move to the rhythm of drums, guitars, a horn. A slow piano melody replaces the other instruments as a lone dancer intertwines with one robot and embraces another. The music picks up and an MC arrives, singing and rapping, as 17 dancers, only five of them human, writhe around him.

This is not just experimental theater. It’s the result of a grant from the National Science Foundation (NSF) awarded to the Center for Music Technology at Georgia Tech. Humans and robots each have their strengths. Where humans are creative and adaptable, robots are consistent and precise. In the best of all worlds, humans and robots will work together, cooperating at home, work, and school. For that to happen, people will need to trust their mechanical partners. And because we’ve evolved to form trust based on certain social cues, robots will need to exhibit those cues.


Performance of “FOREST,” a collaborative project between Georgia Tech Center for Music Technology and the Kennesaw State University Dance Department. (Video credit: Georgia Tech)

Gil Weinberg, the founding director of the Center for Music Technology, has spent years studying those cues. For this project, he proposed an idea to the NSF to disseminate some of his findings to society. “The best way I came up with to bring it to the public,” he says, “was to have a performance with musicians who make sound and with dancers who use gestures.”

According to Amit Rogel, a graduate student in Weinberg’s group, “People, in general, have a lot of fears about robots. There are many movies about killer robots or evil AIs. Sharing our work, where you can see positive interactions with robots in an artistic way, is important.”


Dance Dance Revolution

The video described above stems from earlier work in which Weinberg’s group developed a system that can generate emotional sounds for robots in an effort to improve human-robot trust. To create the data set for the system, they invited musicians to convey a set of emotions vocally or through instruments, then trained a neural network (a piece of software inspired by the brain’s wiring) to generate new audio examples for each emotion. They found that when people collaborated with robots on tasks like placing objects into containers, observers trusted the robots more if the machines emitted emotional sounds during the interaction. The next logical step was teaching the robots to convey emotions through gestures.

“If I have the suit on, and I’m talking to another person, you just see the robot doing subtle motions. For instance, it bobs its head when I say something more impactful. It’s very surprising and really cool.”

Amit Rogel, the Center for Music Technology at Georgia Tech

Translating full-body human posture and gesture into a handless robot is not straightforward. Amit looked into psychology research and read up on how humans express themselves through movement. “For example, when people are happy,” he says, “their heads will be pointed up, their arms will go up, they’ll make repetitive up and down movements.” Weinberg’s group used a robot called Franka Emika Panda, featuring seven degrees of freedom at its seven joints. “Head up means joint six is x degrees. A tall position indicates joint four would be y degrees, and then up and down movement would look like this,” he says, moving his body up and down. In one study, they found that people could easily decipher the intended emotions. Participants also rated these animated robots high on likability and perceived intelligence.

Dancers from Kennesaw State University perform with robots from the Georgia Tech Center for Music Technology. (Image credit: Gioconda Barral-Secchi)

Aside from the sleek video, the group also recorded a student performance comprising six different works. Dancers intermingled with a dozen UFACTORY xArms similar to the Panda. For one piece, the robots executed preprogrammed moves in response to music. For another, they reacted to people who manipulated their joints or showed moves to their cameras. They also played off someone’s brain waves. In yet another, they improvised using data from a dancer’s motion-capture (mocap) suit. Lastly, they obeyed rules telling them what to do based on what their robot neighbors were doing.

Rogel enjoys interacting with the robots while wearing the mocap suit. “The mocap suit picks up on really small movements that you don’t even know you’re doing,” he says. “If I have the suit on, and I’m talking to another person, you just see the robot doing subtle motions. For instance, it bobs its head when I say something more impactful. It’s very surprising and really cool.”


Smooth Operator

Rogel programmed the rules for the robots’ movements in MATLAB®. The code tells the robots how to respond to any combination of mocap, cameras, EEG, music, nudges from humans, and the movements of other robots. Rogel controls how the different elements interact using Simulink®, where blocks represent control functions. He can open the blocks and look at the functions. “I’m a mechanical engineer,” he says. “I like seeing the equations and focusing on the math as opposed to lines of software code. In having all the toolboxes readily available, it’s so easy to get all of this done in MATLAB.”

In Simulink, he visualizes the robots’ movement in two different ways. One shows graphs of various points’ acceleration. The other shows each robot as an animated stick figure. “I can test different parameters and look at how it’s responding without ruining any of the robots,” he says. “Or, if I’m doing cool things, I’ll also be able to see it before testing it on the robot. It’s an easy tool for iterative processes.”


The raw data from the mocap suit is messy. One of the most useful software features turns movement curves into equations that the team can use to generate new robot movements. Usually, they create fifth-order polynomials—equations with variables raised to the fifth power. They do this so that when they take double-derivatives to convert position into velocity and then acceleration, they still have a third-order polynomial—a smooth curve. Otherwise, the motor’s movement might be herky-jerky, damaging the hardware and looking unnatural.
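The smoothing idea can be sketched in a few lines. This is an illustrative Python example, not the team's MATLAB code: the sample data, the function name `fit_quintic`, and the use of a least-squares fit are all assumptions. It fits a fifth-order polynomial to noisy position samples and differentiates it twice, which leaves a third-order polynomial for acceleration.

```python
import numpy as np

def fit_quintic(t, position):
    """Least-squares fit of a fifth-order polynomial to sampled positions."""
    coeffs = np.polyfit(t, position, deg=5)  # six coefficients, highest power first
    return np.poly1d(coeffs)

# Hypothetical noisy joint-angle samples standing in for raw mocap data.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 2.0, 200)
noisy = 30.0 * np.sin(1.5 * t) + rng.normal(scale=1.0, size=t.size)

pos = fit_quintic(t, noisy)  # fifth-order position curve
vel = pos.deriv(1)           # first derivative: fourth-order velocity
acc = pos.deriv(2)           # second derivative: third-order acceleration

print(pos.order, vel.order, acc.order)
```

Because the acceleration is itself a polynomial, it has no jumps over the fitted interval, which is the property the paragraph above describes.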

Another trick involves follow-through. When a person moves one body part, the rest of the body tends to move too. When you wave your hand, for instance, your shoulder adjusts. “We wanted to model this with the robots,” Rogel says, “so that when they dance, it looks smooth, like a fluid motion, and elegant.” The team uses tools that can locate the peak of a curve—one point’s maximum acceleration—and then alter another point’s curve to be offset by a certain amount of time.
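A minimal Python sketch of the follow-through idea, under stated assumptions: the Gaussian-shaped acceleration profile, the 0.15-second delay, and the helper names `peak_time` and `delay_curve` are hypothetical and not taken from the team's tools. It locates the peak of one joint's curve and produces a time-shifted copy for a second joint.

```python
import numpy as np

def peak_time(t, curve):
    """Time at which the curve reaches its maximum."""
    return t[np.argmax(curve)]

def delay_curve(t, curve, delay):
    """Shift a curve later in time by `delay` seconds.

    Samples requested before the start of the data take the first value.
    """
    return np.interp(t - delay, t, curve)

t = np.linspace(0.0, 2.0, 201)
# Hypothetical acceleration profile of the leading joint, peaking at 0.8 s.
lead_acc = np.exp(-((t - 0.8) ** 2) / 0.02)
# The following joint repeats the motion slightly later (assumed delay).
follow_acc = delay_curve(t, lead_acc, delay=0.15)

lead_peak = peak_time(t, lead_acc)
follow_peak = peak_time(t, follow_acc)
```

In this sketch the second joint's peak lands one delay after the first joint's peak. A real system would likely also scale or reshape the following curve rather than copy it.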

Ivan Pulinkala, a choreographer at nearby Kennesaw State University who choreographed the sleek video with the MC, sometimes looked over Rogel’s shoulder, suggesting prompts. He would describe the undulation of the human spine or propose that the robots appear to be breathing. “The part that I found exciting is that it completely changed my approach to choreography and the approach of the dancers”—also affiliated with Kennesaw—“to movement,” Pulinkala says.

In preparation for one section of the videotaped piece, the robots improvised, and then repeated this improvisation so it could be filmed from different angles for the video. To do this, Rogel trained a neural network in MATLAB on one dancer’s performance in order to generate new robotic movements in that dancer’s style.

“One of the biggest challenges was how to make the movement smooth and flowing like human movements,” Weinberg says. “I thought it would be tricky because these robots are not designed for dance. Amit did wonders with them. Look at the student video from the end of the semester. You’ll see how the robots are really grooving with the humans. Otherwise, we might have been falling into the uncanny valley, where the robots look eerie.”


“One of the biggest challenges was how to make the movement smooth and flowing like human movements. I thought it would be tricky because these robots are not designed for dance. Amit did wonders with them. You’ll see how the robots are really grooving with the humans.”

Gil Weinberg, director, the Center for Music Technology at Georgia Tech

Amit Rogel (foreground, right), a Georgia Tech Center for Music Technology graduate student, works with other “FOREST” researchers (left to right) Mohammad Jafar, Michael Verma, and Rose Sun. (Image credit: Allison Carter, Georgia Tech)

Symbiosis

“I was happily surprised by the affection the Kennesaw dancers formed toward our robots,” Weinberg says. “It was a long shot because they came from a completely different world. Before the workshop, they probably perceived robots as just mechanical tools.”


“Instead of dancing around them, I’m actually dancing with them. It definitely felt like they were dancing alongside with us.”

Christina Massad, Kennesaw State University

Describing the shift in her thinking, one dancer, Christina Massad, told the Atlanta NPR station, “Instead of dancing around them, I’m actually dancing with them. It definitely felt like they were dancing alongside with us.”

Rogel used MATLAB to tune how sensitive the robots’ joints were to contact. “We did want the dancers to be able to get up close and touch the robots and really establish connections,” he says. “At the beginning, they were very timid and shy around them. But the first time they would bump into a robot while it was moving toward them, the human would be safe, the robot would be safe, and that felt a lot more real to them.”

Dancers get up close and establish a connection with the robots. (Image credit: Georgia Tech)

Independent of contact, a robot would occasionally break down. “The dancers really like that,” Rogel says, “because it also felt more human, like they’re getting tired.” The team also used some tricks to make the robots seem more alive, such as giving them eyes, names, and backstories.

The team has additional experiments in mind. They’d like to further probe people’s trust in response to robotic gestures. They’re also looking at emotional contagion—how people want machines to respond to their own emotions—and the role of people’s personalities; some might prefer different kinds of reactions. In terms of further communicating their insights, they plan to take “FOREST” on tour. Pulinkala hopes to develop a longer show.

One thing that sets “FOREST” apart from other investigations of human-computer interaction is its collection of multiple robots. Weinberg calls it both a technical and a social challenge. “The idea was to use forests as a metaphor. Inspired by the biodiversity in forests, we attempted to bring together a wide set of robots and humans that can play, dance, and influence each other. The music was diverse as well, featuring a mashup of many different genres such as Middle Eastern music, electronic dance, hip hop, classical, reggae, and Carnatic music, among others.”


The humans behind the project were also diverse. “The team comprised students, professors and administrators, musicians, engineers, dancers, and choreographers. The team also had a wide representation of gender, race, nationality, and backgrounds. I think that we were able to create something unique. Everyone learned something.” Weinberg continues, describing the larger mission behind the Center for Music Technology. “I’m a big believer in diversity, which is challenging to achieve at technical universities like Georgia Tech.” Georgia Tech doesn’t have a dance program, for instance. “You may have dancers who are also good at computer science but don’t find a way to combine both passions,” he says. “And this kind of project allows them to combine the best of both worlds.”


The founder of Boston Dynamics explained how he taught robots to dance

Anastasia Nikiforova news editor

Boston Dynamics, creator of some of the world's most advanced dynamic robots, faced the daunting task of programming them to dance to the beat, combining smooth, explosive, and human-like expressive movements. Company founder Mark Raibert described how the robots were taught to dance in an interview with The Associated Press.


It took a year and a half of choreography lessons, modeling, programming, and updates to teach the robots to dance almost like people: smoothly, but energetically and in time. The now-iconic clip of robots dancing to the 1962 hit "Do You Love Me?" by The Contours was filmed in just two days. The video, less than 3 minutes long, became an instant hit on social media. In one week, the clip collected more than 23 million views.


The video features two Atlas humanoid explorer robots from Boston Dynamics dancing, joined by Spot, a dog robot, and Handle, a wheeled robot designed to lift and move crates in a warehouse or truck.

Boston Dynamics founder Mark Raibert called the experience of teaching robots dance very valuable.

The daunting task of teaching robots to dance prompted Boston Dynamics engineers to develop better motion programming tools that would allow robots to coordinate balance, jump, and dance steps at the same time.

“It turned out that we needed to upgrade the robots in the middle of development. To dance non-stop, they needed more energy and 'strength',” Raibert explains. “Therefore, we moved from very crude tools to efficient ones with the ability to generate quickly.”

The robot dances were so good that some online viewers said they couldn't believe their eyes. Some applauded the movements of the robots and the technology they are built on.


“We didn't want the robot to dance like a robot. Our goal was to create a robot that would dance with people, like a person himself, to the rhythm of music, to which all parts of the body obey - arms, legs, torso, head. I think we succeeded,” concludes the founder of Boston Dynamics.


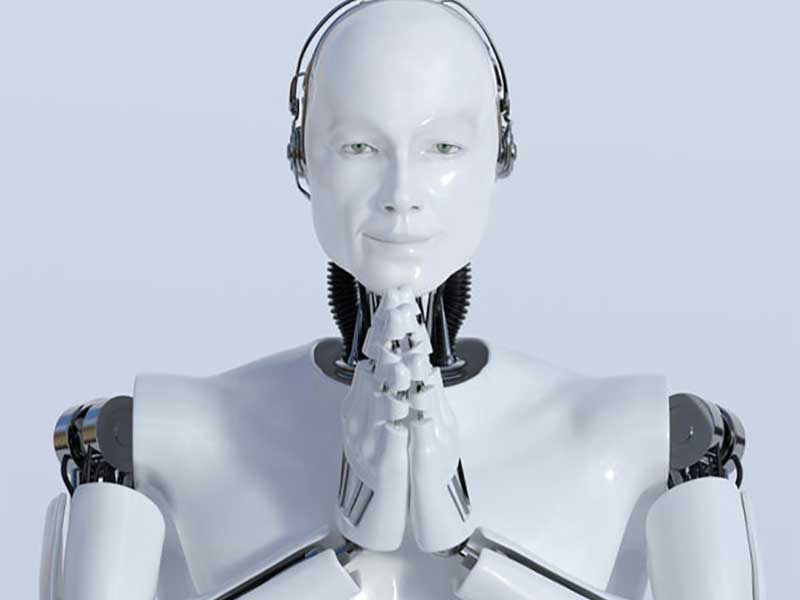

Humanoid robots

Anthropomorphic, i.e. outwardly similar to humans, robots are usually divided into androids (anthropomorphic robots with a high degree of external resemblance to humans) and humanoids (outwardly humanoid).

As a rule, such robots have human-like proportions and a "head", possibly arms, and less often legs. The robot does not have to walk: it can be stationary or mobile, for example wheeled or tracked. But its "human features" must be recognizable, and such a robot usually has cameras mounted on its head.

Russian humanoid robots

Robot cashier "Marusya", Alfa Robotics, Russia

2018.09.05 A robot cashier is being tested in the Teremok restaurant. Robot "Marusya" (modification KIKI, AlfaRobotics).

Android technology (NPO Androidnaya Tekhnika), Moscow

AR-601, Android technology (NPO Androidnaya Tekhnika), Moscow

AR-601 (or AR-600E)

Don State Technical University, Rostov-on-Don

WayBot, Don State Technical University, Rostov-on-Don

photo source: vedomosti.ru, 2018.09

Robot-assistant project that automates interaction with customers: data collection, informing, registration in electronic queue systems, administration. It can replace a person in situations such as payment for services, consultations, excursions, navigation assistance, and printing tickets or photos.


Center for the Development of Robotics at the Foundation for Advanced Study

FEDOR (Final Experimental Demonstration Object Research), Center for the Development of Robotics at the Foundation for Advanced Study

Space robot remotely controlled through an avatar suit (motion capture suit), formerly known as Project Avatar. It may have some autonomy, but so far the degree of autonomy is unclear. In 2016 it was at the prototype level. Readiness is expected by 2021.

2022.12 Introduced in August 2022, the Xiaomi CyberOne robot showed its abilities as a drummer. The height of the robot is 177 cm, weight - 52 kg. This is not a commercial, but a research project. Unlike other projects, this is just a demonstration of the capabilities of the robot, and not a musical project.

Sanbot, China

Humanoid infobot. Equipped with a speech recognition, analysis and synthesis system. Autonomy - up to 4 hours. Can dance. Can display photos and videos. Fewer than a thousand units have been produced. Applications: restaurants, hospitals, shopping malls, schools.

At the beginning of 2018, it is available in three versions that differ in form factor and size - from 90 cm (Sanbot Nano) to 1.5 m.

UBTech, China

Alpha1 PRO UBTech, China

Programmable robot for children (from 8 years old). In Russia, it is represented by the exclusive dealer of UBTech - Graphitek.

2017.08.25 UBTech Alpha1 Pro - programmable robot for children from 8 to 150.

Alpha 2

As of 2015.11, in development; orders are open as part of a crowdfunding campaign.

Manufacturer unknown, China

Xian'er, China

Monk robot, 60 cm tall, on a three-wheeled mobile platform, with a speech interface and touchscreen and support for simplified voice communication. Developed in 2015. The robot can be seen at Longquan Temple, a Buddhist temple in Beijing. It is trained to answer questions using answers compiled by temple masters.

2016.04.24 A robot monk appeared in a Buddhist temple in China.

USA

NASA/DARPA, USA

R5 Valkyrie, NASA/DARPA, USA

R5 shows improved balance

Anthropomorphic robot for use in space, on the Moon, on Mars. 1.8 m, 131.5 kg. With two legs and the ability to walk. Two manipulators in the form of hands. Designed for use on board the spacecraft. Remote controlled. As of 2015.11 in development.

Oregon State University, USA

ATRIAS, Oregon State University, USA

Under development as of 2015.05. Platform for refining the mechanics of bipedal walking.

Oussama Khatib and Stanford University, USA

OceanOne, Oussama Khatib and Stanford University, USA

The scuba diving robot receives commands from the operator on the surface via a cable - the avatar system controls the robot's manipulators, repeating the movements of the operator's hands.

Japan

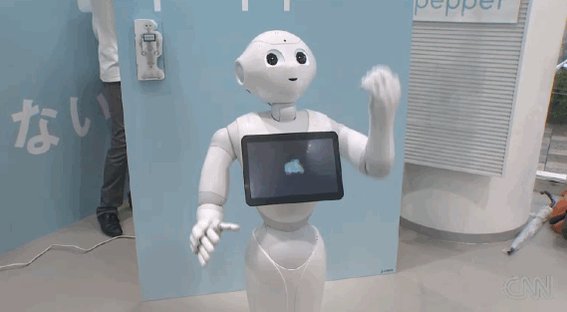

Aldebaran Robotics, France (Japan)

NAO, Aldebaran Robotics, France (Japan)

NAO h3 Next 58 cm tall anthropomorphic domestic robot. Companion, assistant or research platform (STEM). Since 2012.

South Korea

Boston Dynamics, originally USA, South Korea

Atlas, Boston Dynamics, USA

2016.09.12 Atlas was taught to balance on one leg.

2016.02.24 The new generation of the Google

Japan

Honda, Japan

ASIMO, Honda, Japan

Android-type robot. It is noted that as of 2016.03 this remarkable prototype had not turned into a commercially available product.

Tokyo University, Japan

Kengoro, Tokyo University, Japan

A bipedal robot that can walk and even do push-ups. More than 100 electric motors and other actuators. The main feature - the robot can "sweat", which allows it to deal with overheating associated with a high density of electric motors and actuators. To do this, the robot needs to replenish the supply of water.

Manufacturer unknown, Japan

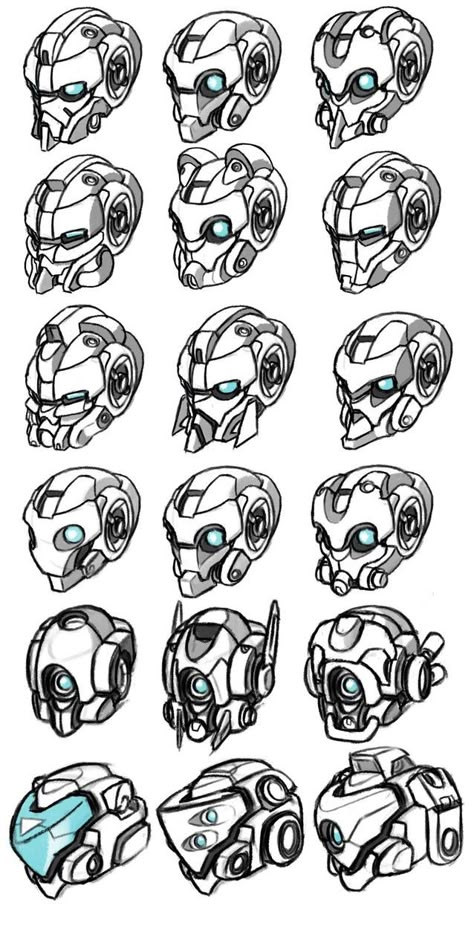

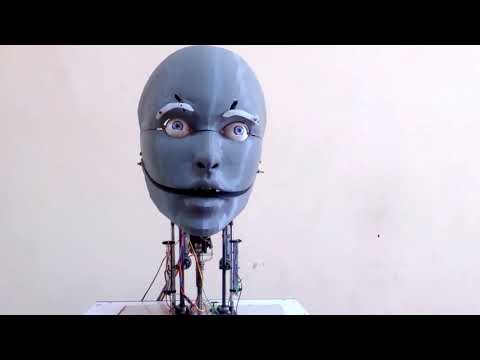

SEER, Japan

Designed by engineer Takayuki Todo. It's just a "robot head". Introduced in 2018. The head can both recognize the facial expression of the interlocutor and express emotions on its own face. Unlike androids with high human similarity, SEER does not have many facial expression actuators, however, a certain human similarity is achieved. The eyes have two degrees of freedom, in addition, the eyebrows also move - a special mechanism is responsible for this. The mouth is still motionless and has no lips. The author plans to add lip automation in the course of further development. In general, the eyes look natural, except for a noticeable lack of eye focusing effect. Eyebrows do not look natural, but they convey emotions well. Head movements look unnatural. Video, April 2018.


2018.08.17 The Japanese "robot head" SEER is another attempt to emulate facial expressions.
